L3C AI Briefing

Save the day: 21 May 2020, Glasgow, UK Over the course of the last year, L3C has established a fruit...

Benchmark Study by Imagga/L3C

Large Model Support for Semantic Segmentation of Cityscape and Waste Images

Download study here

Study overview

Datasets and CNNs

Hardware overview

Benchmarks

Conclusions

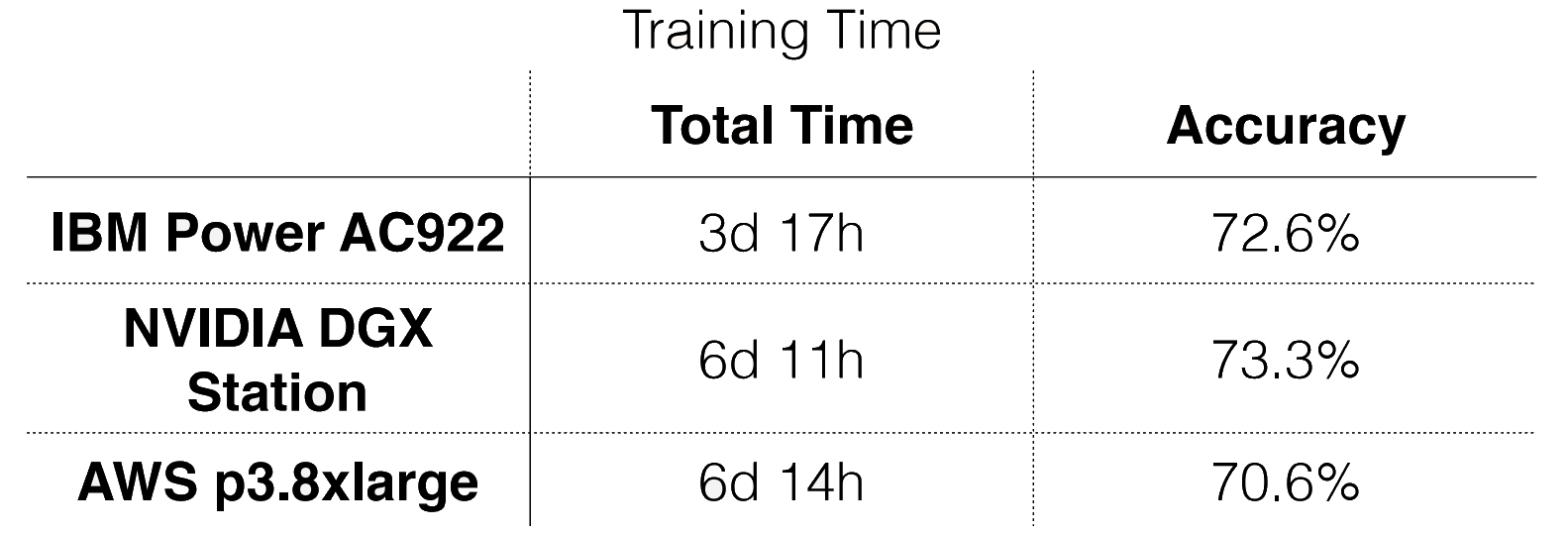

In this study we wanted to benchmark and compare the IBM Power AC922 server vs the NVIDIA DGX Station vs the Amazon Web Services p3.8xlarge instance type for state-of-the-art deep learning training.

Image classification with a very large number of categories and object recognition were considered, including the PlantSnap plant recognition classifier with over 320K plant species. However, we also wanted to use publicly available data sets for this benchmarking, and 3D medical image processing had already been extensively benchmarked by other analysts.

We finally decided to focus this benchmarking study of the IBM Power AC922 vs the NVIDIA DGX Station vs the Amazon Web Services p3.8xlarge instance on semantic segmentation, using IBM’s Large Model Support, of (a) cityscape images from the Cityscapes data set and (b) waste in the wild from the TACO data set.

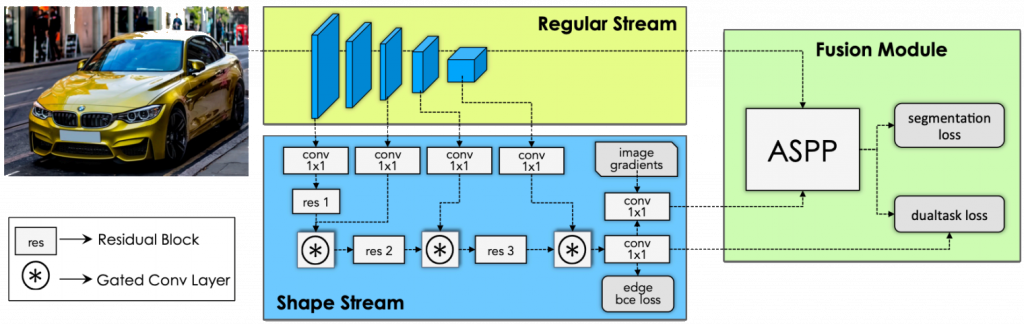

For the choice of a neural network, we were looking for a hardware-demanding architecture that uses high-resolution input images for training. Gated Shape CNN (G-SCNN), a state-of-the-art CNN architecture and one of the top methods in the Cityscapes benchmark, is a perfect candidate for benchmarking performance.

+ Street photos from 50 cities (cityscapes)

+ Several months (spring, summer, fall), daytime

+ Good/medium weather conditions

+ Manually selected frames

+ Large number of dynamic objects

+ Varying scene layout

+ Varying background

+ 5000 annotated images with fine annotations

+ 20000 annotated images with coarse annotations

+ Very challenging data set for semantic segmentation

+ Various applications such as autonomous cars and driving

+ Semantic image segmentation is one of the most widely studied problems in computer vision and image analysis with applications in autonomous driving, 3D reconstruction, medical imaging, image generation, etc.

+ State-of-the-art approaches for semantic segmentation are predominantly based on Convolutional Neural Networks (CNN).

+ Recently, dramatic improvements in performance and inference speed have been driven by new architectural designs

State-of-the-art CNN architecture, achieving 82.8% IoU score on the Cityscapes dataset

Originally trained on an NVIDIA DGX Station 2 with 8 NVIDIA Tesla V100 GPUs

Trained with a batch size of 16 (2 per GPU)

Trained for 175 epochs with a high-resolution input size of 800×800
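The IoU (intersection over union) score quoted above can be illustrated with a minimal sketch. It assumes predictions and ground truth are flat lists of per-pixel class indices; the function name `mean_iou` and the tiny label lists are hypothetical, and the official Cityscapes evaluation additionally handles ignored labels, which this sketch does not model.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection-over-union across the classes that occur in pred or target."""
    ious = []
    for c in range(num_classes):
        # Pixels where both prediction and ground truth are class c
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        # Pixels where either prediction or ground truth is class c
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # skip classes absent from both maps
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Hypothetical 6-pixel example with 3 classes
pred = [0, 0, 1, 1, 2, 2]
target = [0, 1, 1, 1, 2, 0]
score = mean_iou(pred, target, num_classes=3)
print(f"mean IoU = {score:.3f}")
```

Per-class IoU here is 1/3, 2/3 and 1/2, so the mean is 0.5. A reported score such as 82.8% is the same kind of average, computed over all evaluation pixels and classes.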


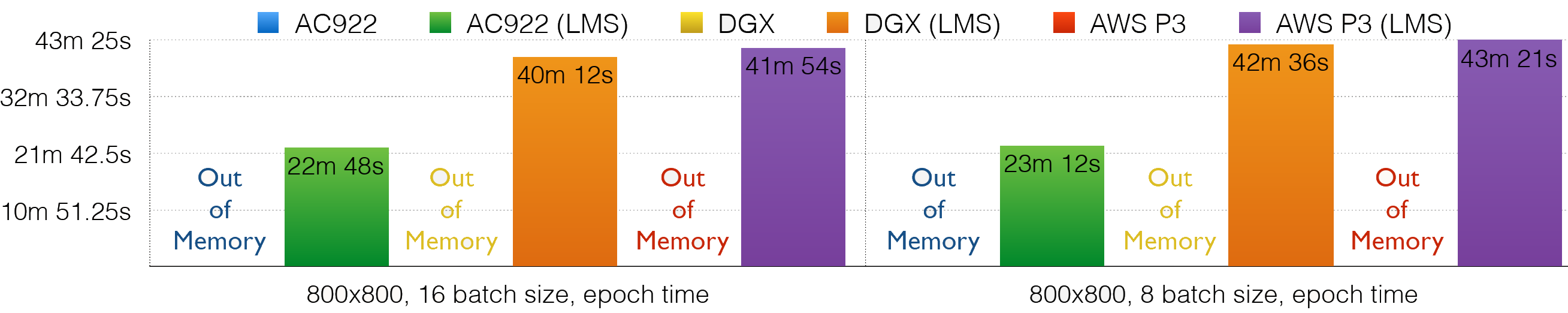

Large Model Support (LMS) allows the successful training of deep learning models that would otherwise exhaust GPU memory and abort with out-of-memory errors. LMS manages this oversubscription of GPU memory by temporarily swapping tensors to host memory when they are not needed.
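The swapping idea can be illustrated with a toy simulation. This is not IBM's implementation: the class `ToySwapper`, its least-recently-used eviction policy and the unit-less sizes are all assumptions made for illustration, since the study does not describe how LMS chooses which tensors to move.

```python
from collections import OrderedDict


class ToySwapper:
    """Toy model of GPU memory oversubscription (hypothetical, LRU eviction)."""

    def __init__(self, gpu_capacity):
        self.gpu_capacity = gpu_capacity
        self.on_gpu = OrderedDict()  # name -> size, least recently used first
        self.on_host = {}            # tensors currently swapped out to host memory
        self.swap_outs = 0
        self.swap_ins = 0

    def access(self, name, size):
        """Make a tensor resident on the GPU, evicting others if needed."""
        if size > self.gpu_capacity:
            raise MemoryError(f"{name} alone does not fit in GPU memory")
        if name in self.on_gpu:
            self.on_gpu.move_to_end(name)  # mark as most recently used
            return
        if name in self.on_host:
            del self.on_host[name]         # bring it back from host memory
            self.swap_ins += 1
        # Evict least recently used tensors until the new one fits
        while sum(self.on_gpu.values()) + size > self.gpu_capacity:
            victim, victim_size = self.on_gpu.popitem(last=False)
            self.on_host[victim] = victim_size
            self.swap_outs += 1
        self.on_gpu[name] = size


# Three tensors of size 4 on a "GPU" of capacity 10: 12 units are needed in total
mem = ToySwapper(gpu_capacity=10)
for tensor in ["a", "b", "c", "a"]:
    mem.access(tensor, 4)
print(list(mem.on_gpu), mem.swap_outs, mem.swap_ins)
```

Without swapping, the third allocation would fail. With it, training continues at the cost of extra transfers between GPU and host, which is why the bandwidth of the CPU-GPU link matters.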

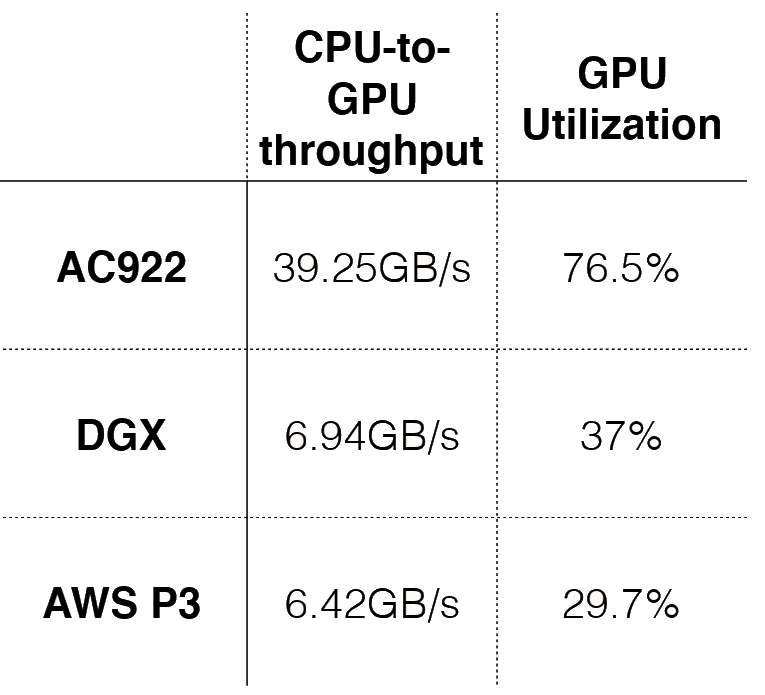

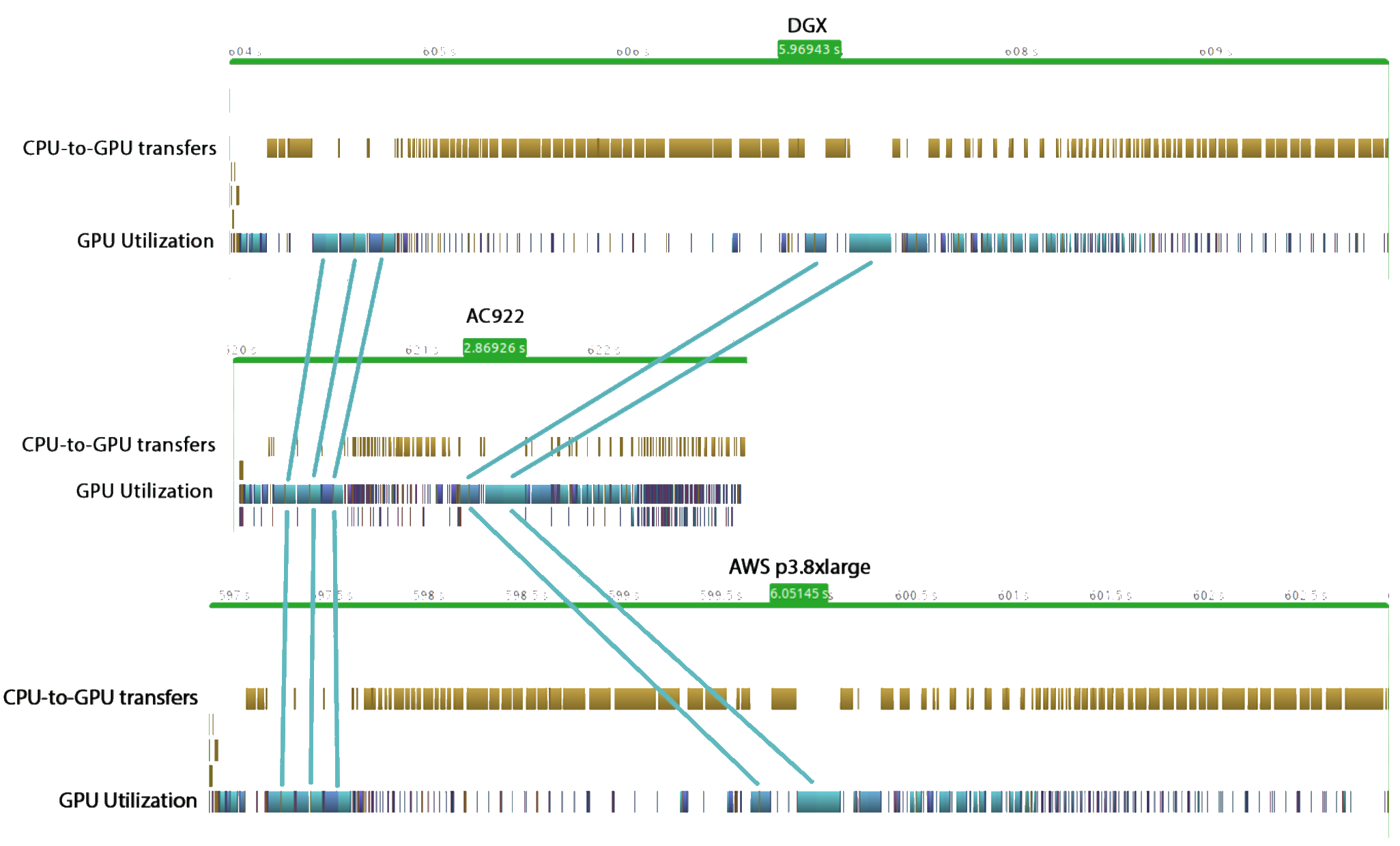

IBM POWER Systems servers (Power8 and Power9 cores) with NVLink technology are especially well-suited to LMS because of their hardware topology that enables fast communication between CPU and GPUs. They include high-speed I/O interfaces like CAPI and PCIe v4.

One or more elements of a deep learning model can lead to GPU memory exhaustion. These include:

+ Model depth and complexity

+ Input data size (e.g. high-resolution images)

+ Batch size
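A rough back-of-the-envelope sketch shows how the last two factors scale memory use. It estimates the size of a single activation tensor only; the 64-channel layer is a hypothetical example, 32-bit floats are assumed, and real training also stores weights, gradients, optimizer state and many more activations.

```python
def activation_bytes(batch, channels, height, width, bytes_per_value=4):
    """Bytes needed to store one activation tensor (float32 by default)."""
    return batch * channels * height * width * bytes_per_value


MIB = 1024 * 1024

# Hypothetical layer: 64 channels at the full 800x800 input resolution, 2 images per GPU
base = activation_bytes(batch=2, channels=64, height=800, width=800)
print(f"base:               {base / MIB:.1f} MiB")

# Doubling the batch size doubles the memory for this tensor
print(f"batch 4:            {activation_bytes(4, 64, 800, 800) / MIB:.1f} MiB")

# Doubling the image side length quadruples it
print(f"1600x1600 input:    {activation_bytes(2, 64, 1600, 1600) / MIB:.1f} MiB")
```

Memory grows linearly with batch size and quadratically with image side length, so high-resolution segmentation networks reach the GPU memory limit quickly.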

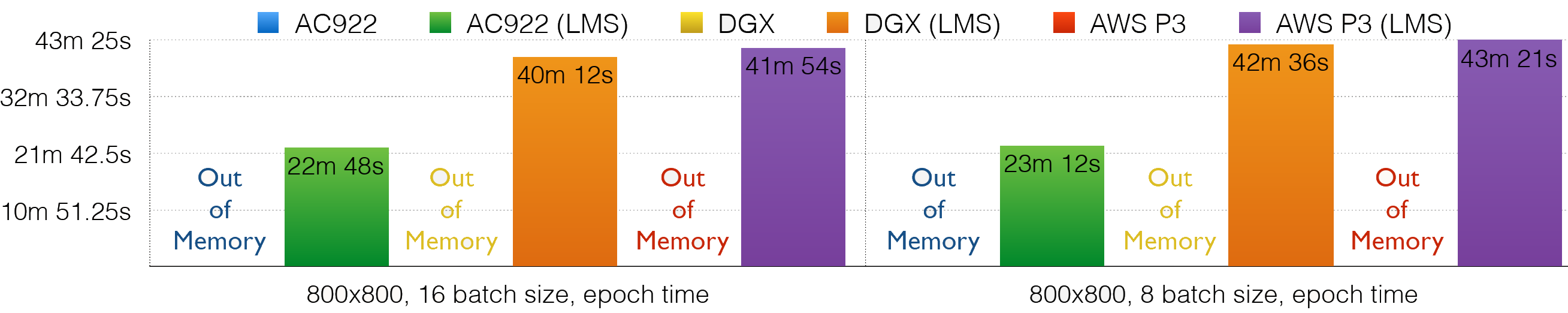

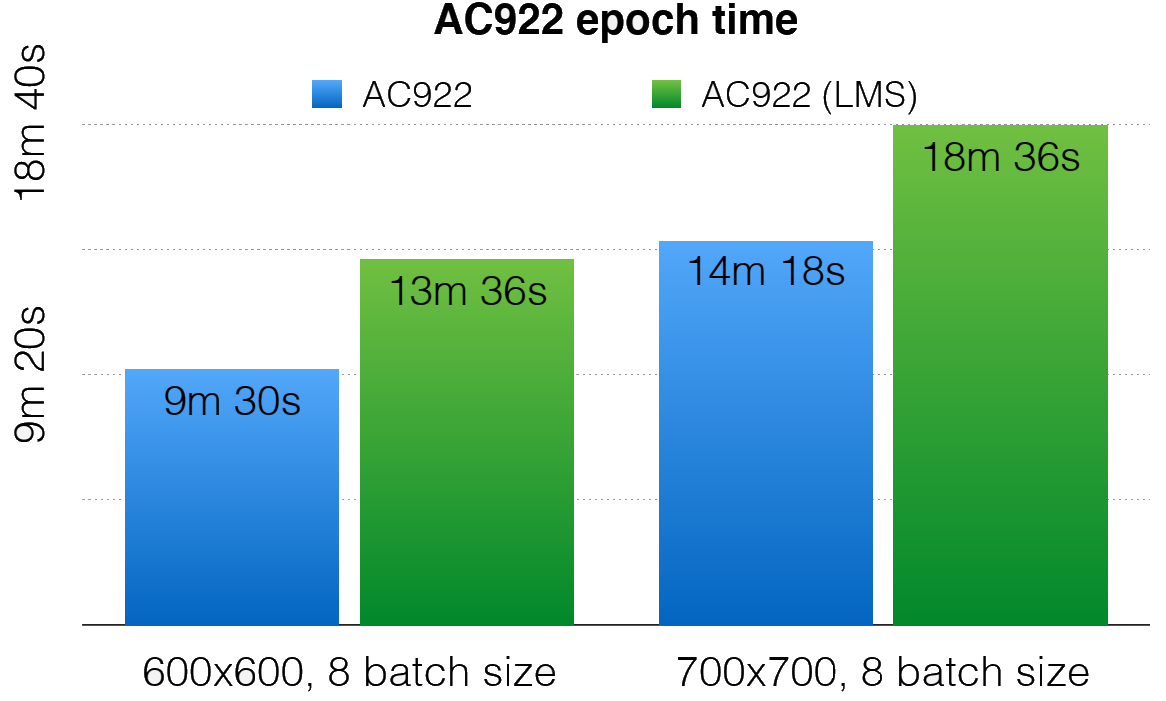

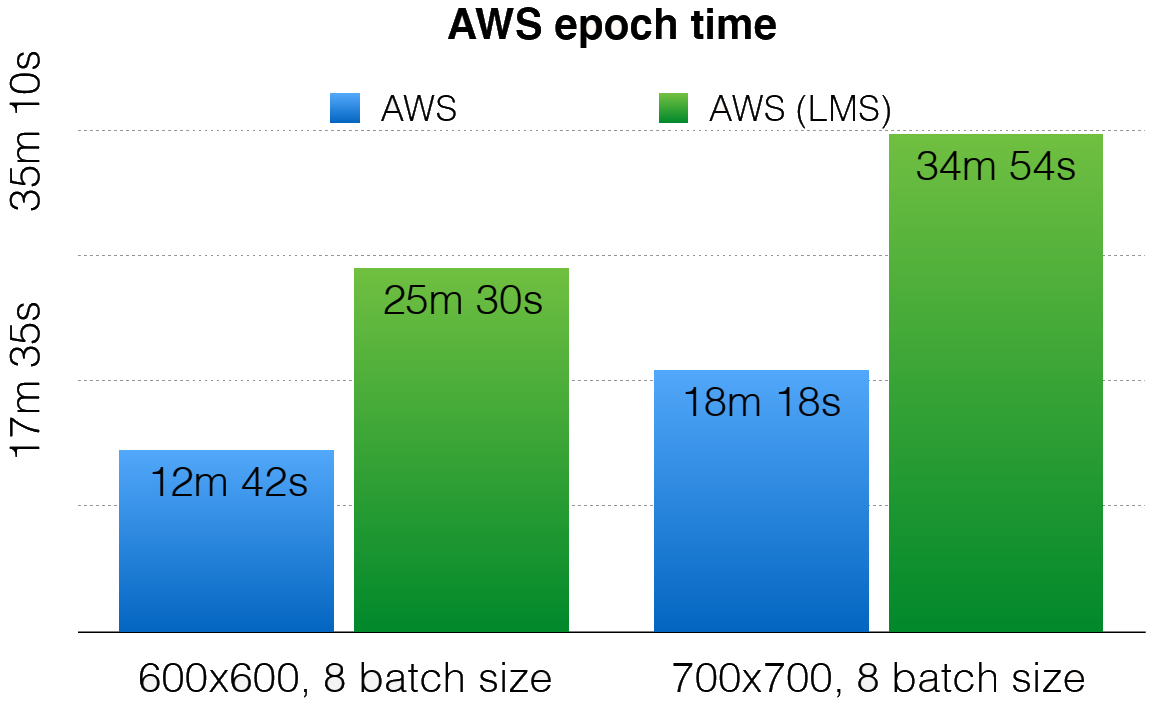

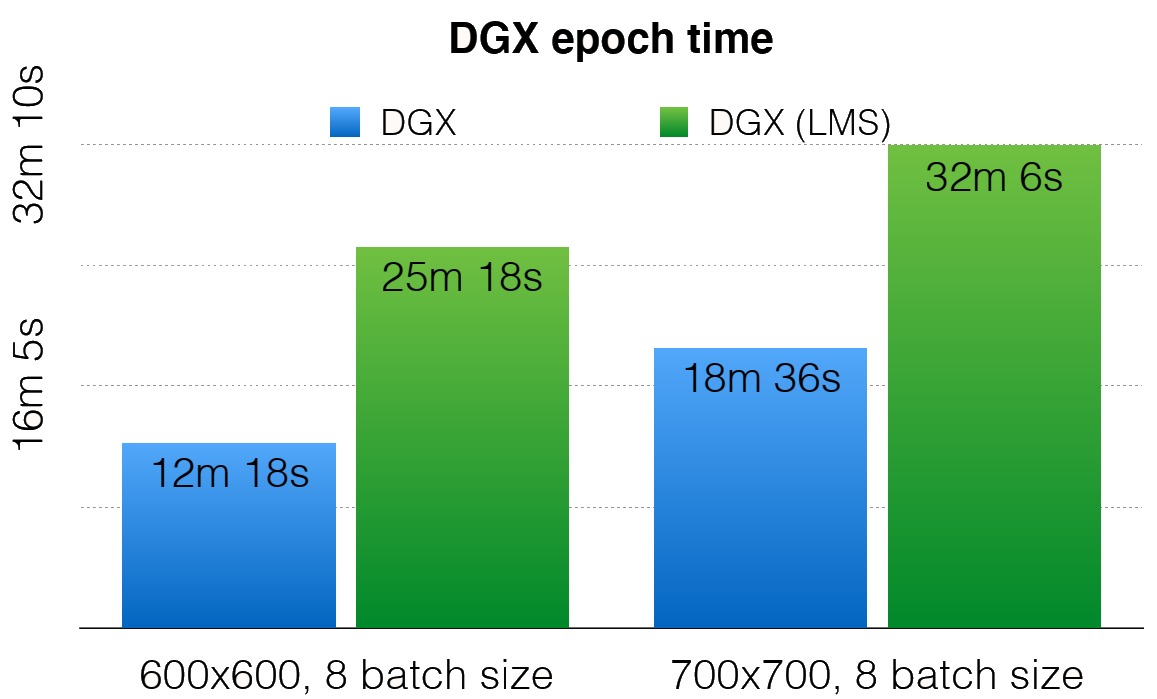

Training time – comparing the training time for 175 epochs on the Cityscape dataset on the AC922, the DGX and the AWS p3.8xlarge instance type

Showcasing the benefits of using Large Model Support by demonstrating “Out of Memory” situations using the G-SCNN architecture and the Cityscapes dataset

An overview of the training time overhead when using Large Model Support

GPU profiling – a detailed comparison of the two systems during training using NVIDIA profiling data

Humans have been littering the Earth from the bottom of the Mariana Trench to Mount Everest. Every minute, at least 15 tonnes of plastic waste leak into the ocean, which is equivalent to the capacity of one garbage truck.

One way to achieve automatic waste segmentation is to use semantic segmentation.

We used the TACO dataset for training our waste segmentation model based on the G-SCNN architecture.

The dataset consists of 715 images and 2152 annotations, labeled in 60 categories of litter.

We trained the model exclusively on the IBM Power AC922, as it achieved the best performance in our benchmarks.

IBM Power AC922 is significantly faster than NVIDIA DGX Station and the AWS p3.8xlarge instance type in such computationally demanding tasks as semantic segmentation.

Large Model Support enables us to train the model with a larger batch size and larger input image dimensions, producing better overall results.

IBM’s Large Model Support technology has less overhead when used with the IBM Power AC922 hardware, leading to higher GPU utilisation and shorter training time.

IBM Power AC922 satisfies the hardware requirements for training on complex tasks such as automatic waste segmentation.


AIX / Solaris / HPUX Cloud At Your Reach

7 Bell Yard, London WC2A 2JR

+44 0203 918 8910

office@l3c.cloud